Rung-Ching Chen1*, Christine Dewi1,3, Wei-Wei Zhang1,2, Jia-Ming Liu1, Su-Wen Huang1,4*

1 Department of Information Management, Chaoyang University of Technology, Taiwan, R.O.C.

2 Ningxia University, Xixia District, Yinchuan City, Ningxia

3 Information Technology, Satya Wacana Christian University, Central Java, Indonesia.

4 Taichung Veterans General Hospital, Taiwan, R.O.C.


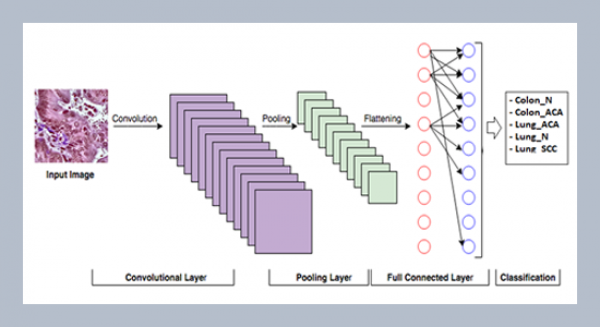

ABSTRACT

With the rise of the Internet of Things, more devices can connect to the Internet, and the large amount of data collected from them can be used for different applications. Hardware for the Internet of Things is being developed not only for industrial use but also for smart homes. The smart home covers a broad range of topics, including remote control of home appliances, human sensing, temperature-controlled air conditioning, and security monitoring. The human-machine interface is essential to all of these applications. Gesture recognition systems are used in many real applications, but accuracy and real-world factors make them complicated to deploy. The gesture control product most commonly used on the market is the gesture control sensor board, which determines gestures from changes in an electric field. Its limitations are that it requires close-range operation and has sensitivity problems at critical points. In this paper, we combine the gesture control board with gesture image recognition methods to perform double authentication gesture recognition. A Raspberry Pi serves as the control center and integrates the HUE intelligent light bulb to form a gesture recognition system. The results show that the proposed gesture recognition achieves an accuracy rate of 90%, which is higher than that of the SVM method.
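The abstract does not state how the two recognizers are combined. One plausible reading of "double authentication" is that a gesture is accepted only when the gesture control board and the image classifier report the same gesture. The following is a minimal Python sketch under that assumption; the function name `fuse_gestures`, the label strings, and the confidence threshold are hypothetical and are not taken from the paper.

```python
from typing import Optional


def fuse_gestures(
    board_label: Optional[str],
    image_label: Optional[str],
    image_confidence: float,
    min_confidence: float = 0.8,  # hypothetical threshold, not from the paper
) -> Optional[str]:
    """Return a gesture only when both recognizers agree (assumed fusion rule).

    board_label: gesture reported by the sensor board, or None if none detected.
    image_label: gesture predicted by the image classifier, or None.
    image_confidence: classifier confidence in [0, 1] for image_label.
    """
    # Reject if either recognizer produced no result.
    if board_label is None or image_label is None:
        return None
    # Reject low-confidence image predictions.
    if image_confidence < min_confidence:
        return None
    # Accept only when the two independent recognizers agree.
    if board_label != image_label:
        return None
    return board_label


if __name__ == "__main__":
    # Both agree with sufficient confidence: the gesture is accepted.
    print(fuse_gestures("swipe_left", "swipe_left", 0.93))
    # The recognizers disagree: no command is issued.
    print(fuse_gestures("swipe_left", "swipe_right", 0.93))
```

Under this rule a disagreement or a weak image prediction yields no command, which trades some responsiveness for fewer false triggers. Whether the authors use strict agreement or another fusion scheme is not stated in the abstract.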

Keywords: Raspberry Pi, Gesture recognition, Deep learning.



ARTICLE INFORMATION

Received: 2020-03-29

Revised: 2020-06-06

Accepted: 2020-07-28

Available Online: 2020-09-01

Cite this article:

Chen, R.C., Dewi, C., Zhang, W.W., Liu, J.M., Huang, S.W. 2020. Integrating gesture control board and image recognition for gesture recognition based on deep learning. International Journal of Applied Science and Engineering, 17, 237–248. https://doi.org/10.6703/IJASE.202009_17(3).237

Copyright The Author(s). This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are cited.